The Silent Drivers of AI Governance

How Partners, Customers, and Regulators Are Shaping AI Governance in 2026

Guest Contributor

Andrew Clearwater

Partner, Privacy & Cybersecurity, Dentons

Andrew Clearwater is a globally recognized Privacy & AI governance thought leader who has spoken at many of the world's leading data conferences. He's also an inventor on several patents that help operationalize and automate legal requirements.

Andrew is also a Fellow of Information Privacy (FIP), holds the CIPM, CIPP/US, and CIPP/E certifications, and has an LLM in Global Law and Technology.

To stay up to date on AI governance, check out Andrew’s Substack for regular updates.

The business case for AI adoption is clear. Enterprises see competitive advantage in automation, efficiency gains, and new capabilities that AI enables. The pressure to move fast is real, and organizations are responding by deploying AI across functions before competitors can establish market position.

The problem is that speed often outpaces governance. Teams are standing up AI capabilities, integrating third-party models, and feeding systems with enterprise data faster than policies, controls, and oversight mechanisms can keep up. The governance gap isn't theoretical. It's the natural result of business urgency meeting organizational complexity.

So when does this AI governance gap become relevant to most businesses?

When external pressure arrives.

Business partners start including AI provisions in procurement questionnaires. Customers ask how their data feeds AI systems. Regulators announce enforcement priorities. And suddenly organizations realize they can't answer basic questions about what AI they're running, what data it touches, or who approved its deployment.

The Actual Drivers of AI Governance Investment

Regulation gets the headlines, but contractual and commercial implications often surface governance gaps first.

Vendor Contracts

When your enterprise customers start including AI-related provisions in vendor agreements, asking about training data sources and model governance, those questions require answers that many organizations don't yet have.

AI Transparency

Transparency expectations are also evolving across stakeholder groups. Employees want to know how AI affects their work and their data. Customers want assurance that the data they shared under previous terms isn't being used in ways they didn't anticipate. Board members want to understand risk exposure. Each of these audiences requires different communication, but all require the organization to actually know what it's doing with AI.

Legal Landscape

The legal liability landscape extends well beyond AI-specific statutes. Consumer protection law, civil rights obligations, existing privacy regulations, and intellectual property frameworks all apply to AI activities. An organization focused exclusively on AI-specific compliance may miss exposure from these established legal domains.

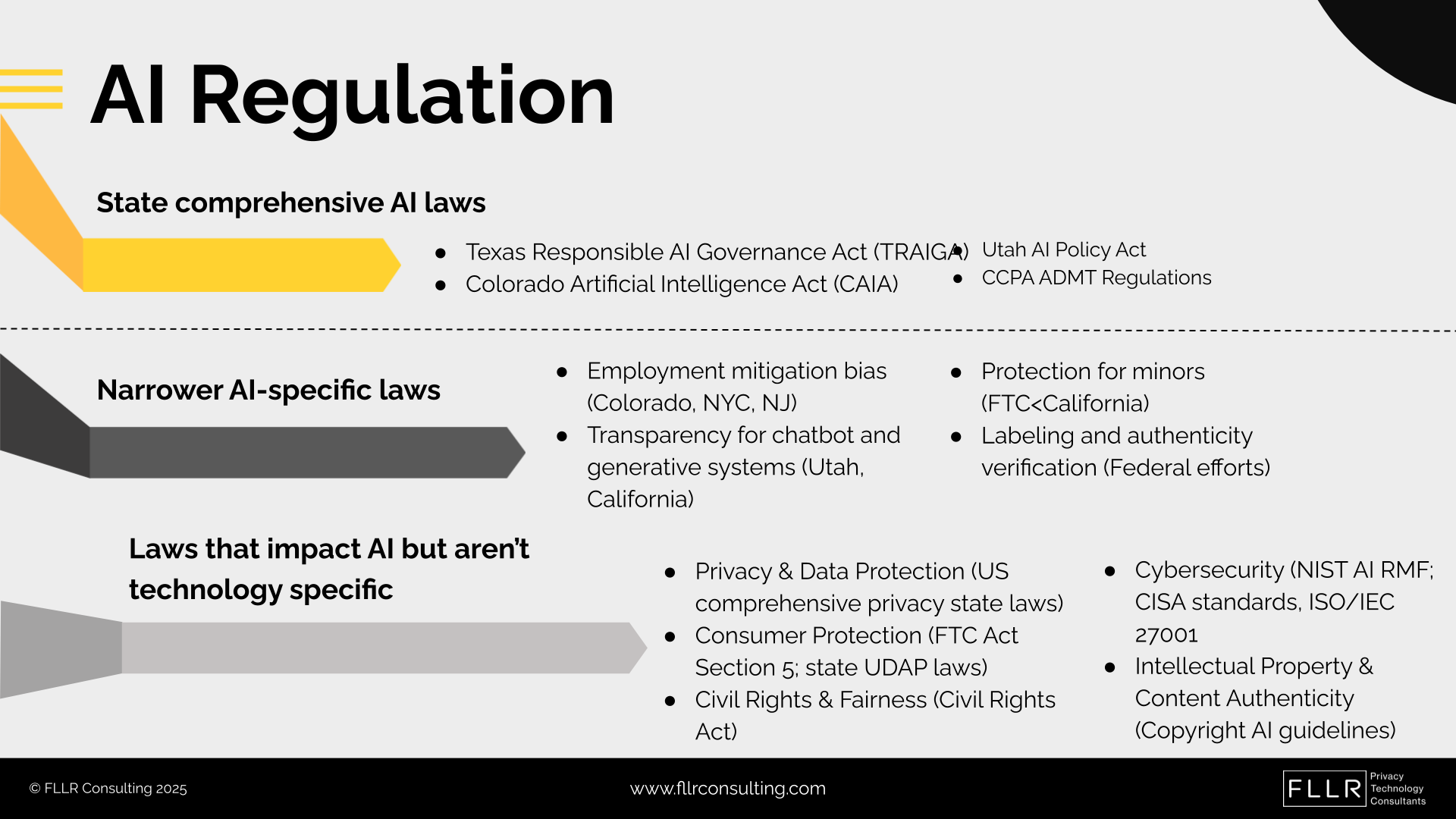

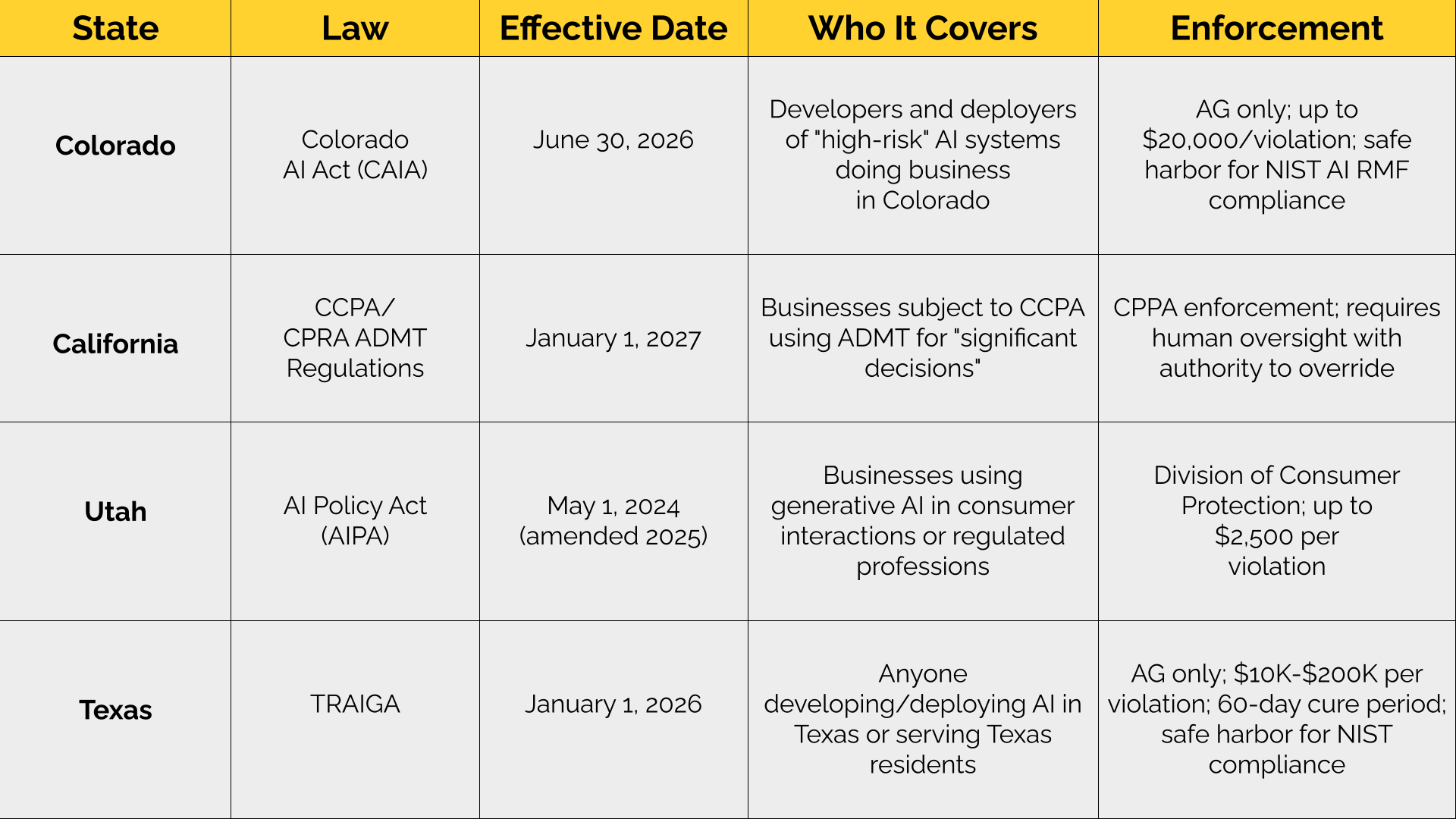

The State of U.S. AI Regulation

Four states have comprehensive AI governance frameworks that warrant attention: Texas, Colorado, Utah, and California. But expecting the same pattern of state-by-state comprehensive law proliferation we saw with privacy would be a mistake. Legislative approaches to AI are more cautious and focused on specific high-risk contexts rather than broad regulatory frameworks.

Beyond comprehensive frameworks, these states are targeting the following specific use cases.

Employment and hiring decisions

When AI systems influence who gets interviewed, who gets promoted, or who gets terminated, transparency and fairness requirements apply.

Healthcare pricing and access decisions

Children's data and AI-powered features aimed at minors

Chatbots

Requirements to disclose that consumers are interacting with AI rather than a human are spreading.

Mental health applications and AI-powered customer service

Existing Laws Apply to New Technology

The absence of AI-specific regulation doesn't mean AI activities are unregulated. Established legal frameworks already apply:

Privacy laws require understanding data flows, and those flows now include AI training and inference

Consumer protection statutes enforced by the FTC address deceptive practices, and AI systems that produce misleading outputs or make unfair decisions trigger those frameworks

Civil rights law applies to automated decision-making that creates disparate impact

Intellectual property frameworks govern training data sourcing and model outputs

When organizations commit to frameworks and principles, even voluntary ones, those commitments create enforceable obligations. If your privacy notice says you don't use customer data for AI training, that commitment has legal weight. If you've publicly adopted OECD AI principles, deviation from those principles creates reputational and potentially legal exposure.

Enforcement won't wait for AI-specific laws. The FTC has pursued AI-related enforcement under existing authority, with algorithm deletion remedies requiring companies to destroy AI models trained on improperly collected data. State attorneys general are signaling the same approach.

Massachusetts AG Andrea Campbell issued an advisory in April 2024 explicitly stating that existing consumer protection, anti-discrimination, and data security laws apply to AI "just as they would within any other applicable context," and that her office "intends to enforce these laws accordingly."

The advisory lists specific violations including:

Misrepresenting AI reliability

Supplying defective AI systems

Using AI that produces discriminatory results

Deploying deepfakes or voice cloning for fraud

Organizations waiting for AI-specific legislation to build governance programs are misjudging the enforcement timeline.

The ISO 42001 Framework

Organizations facing diverse obligations from multiple sources naturally seek simplification. ISO 42001 provides a standardized framework for AI management systems that can serve as an organizing principle.

The framework creates structure around governance, risk assessment, documentation, and continuous improvement. For organizations already familiar with ISO certification processes through 27001 or 27701, the AI management system follows similar patterns.

Certification provides third-party validation that can simplify customer and partner conversations. Rather than responding to unique questionnaires from each stakeholder, certified organizations can point to their certification as evidence of governance maturity.

But framework adoption shouldn't become a substitute for understanding your actual AI activities. The organizations getting value from ISO 42001 are using it as a structure for genuinely building governance capabilities, not as a badge to display.

Phased Approach to Building Governance

Organizations that successfully build AI governance programs typically follow a progression:

Phase 1: Policy Foundation

Start with an acceptable use policy that defines what AI tools employees can use, what data can flow into AI systems, and what review processes apply to AI outputs. This creates a baseline governance layer even before deeper program development.

Phase 2: Inventory and Mapping

What AI systems are you using? What AI systems are your vendors using on your behalf? What data feeds those systems? Until you can answer these questions comprehensively, risk assessment and management remain incomplete.
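For teams that keep their inventory in code or a spreadsheet export, the questions above can be sketched as a simple record with a completeness check. This is a minimal illustration, not a prescribed schema: the field names, the `inventory_gaps` helper, and the sample systems are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class AISystemRecord:
    """One entry in a hypothetical AI inventory (field names are illustrative)."""
    name: str
    owner: str = ""          # accountable person or team
    vendor: str = ""         # empty if the system is built in-house
    data_categories: list[str] = field(default_factory=list)  # data feeding the system
    approved_by: str = ""    # who signed off on deployment


def inventory_gaps(record: AISystemRecord) -> list[str]:
    """Return the inventory questions this record cannot yet answer."""
    gaps = []
    if not record.owner:
        gaps.append("owner")
    if not record.data_categories:
        gaps.append("data_categories")
    if not record.approved_by:
        gaps.append("approved_by")
    return gaps


# Sample entries, invented for illustration only.
inventory = [
    AISystemRecord("resume-screener", owner="HR Ops", vendor="ExampleVendor",
                   data_categories=["applicant CVs"], approved_by="CISO"),
    AISystemRecord("support-chatbot", vendor="ExampleVendor"),
]

for rec in inventory:
    print(rec.name, inventory_gaps(rec))
```

A record with an empty gap list is one you can speak to when a customer questionnaire or regulator asks about it; anything else marks where the mapping work remains.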

Phase 3: Risk and Impact Assessment

Which AI applications are high-risk based on the decisions they influence or the data they process? What controls are appropriate for each risk level? How frequently should you reassess as systems evolve?
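One way to make those questions concrete is a small tiering rule that maps each system to a risk level and a reassessment interval. The sketch below is only an assumption-laden example: the high-risk contexts, the sensitive data categories, and the review intervals are placeholders that each organization would set for itself, not values drawn from any statute or standard.

```python
from enum import Enum


class RiskTier(Enum):
    HIGH = "high"
    MEDIUM = "medium"
    LOW = "low"


# Assumed for illustration: decision contexts treated as high-risk.
HIGH_RISK_DECISIONS = {"employment", "healthcare", "minors"}
# Assumed for illustration: data categories that raise the tier.
SENSITIVE_DATA = {"health", "biometric", "children"}
# Assumed reassessment cadence per tier, in days.
REVIEW_INTERVAL_DAYS = {RiskTier.HIGH: 90, RiskTier.MEDIUM: 180, RiskTier.LOW: 365}


def classify(decision_context: str, data_categories: set[str]) -> RiskTier:
    """Tier a system by the decisions it influences, then by the data it processes."""
    if decision_context in HIGH_RISK_DECISIONS:
        return RiskTier.HIGH
    if data_categories & SENSITIVE_DATA:
        return RiskTier.MEDIUM
    return RiskTier.LOW


tier = classify("employment", {"applicant CVs"})
print(tier.value, REVIEW_INTERVAL_DAYS[tier])
```

Keeping the rule this explicit makes it easy to review with legal and business stakeholders and to change as the regulatory picture shifts.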

Phase 4: Roles and Accountability

Who approves new AI deployments? Who monitors ongoing operations? Who responds when something goes wrong? Governance without clear accountability creates documentation without action.

Phase 5: Incident Response

AI-specific incident response deserves particular attention. The failure modes differ from traditional IT incidents. An AI system producing biased outputs or hallucinated information may not trigger standard monitoring. Organizations need detection and response capabilities tuned to AI-specific risks.
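As an example of detection tuned to an AI-specific failure mode, the sketch below flags groups whose selection rate falls well below that of the best-performing group. The 0.8 default threshold is an assumption borrowed from the "four-fifths" rule of thumb used in U.S. employment contexts; the function names and sample counts are hypothetical, and a real program would choose its metrics and thresholds with counsel.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Map each group to selected / total, skipping groups with no records."""
    return {g: sel / total for g, (sel, total) in outcomes.items() if total > 0}


def impact_ratio_alerts(outcomes: dict[str, tuple[int, int]],
                        threshold: float = 0.8) -> list[str]:
    """Return groups whose selection rate is below `threshold` times the highest rate."""
    rates = selection_rates(outcomes)
    if not rates:
        return []
    best = max(rates.values())
    if best == 0:
        return []  # nobody was selected, so there is no ratio to compare
    return sorted(g for g, r in rates.items() if r / best < threshold)


# Invented counts: (selected, total) per group.
sample = {"group_a": (50, 100), "group_b": (30, 100)}
print(impact_ratio_alerts(sample))
```

A check like this would not fire in standard uptime or error-rate monitoring, which is why it needs to be wired into the incident process deliberately.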
